Eclipse Sparkplug™ is a specification for improving MQTT interoperability by defining topic and payload contents, and the interaction between devices and monitoring applications. It is being standardized at the Eclipse Foundation. Sparkplug was designed for industrial IoT applications, but there is growing interest outside that area.

There are many resources for Sparkplug which you can find by searching for “MQTT Sparkplug”, but here are a few we’ll be using:

- Eclipse Sparkplug specification project

- Sparkplug Github repository

- Eclipse Tahu Sparkplug client libraries project

- Cirrus Link – MQTT Sparkplug Tahu

The intent of this article is to get you up and running with a working Sparkplug implementation quickly, so you can see it in action rather than just read the specification. If you do want to read the specification, go to the Sparkplug GitHub repository, check out the develop branch and build it according to the README (essentially gradlew build). If you want a high-level view of Sparkplug first, go to the Conclusion of this article for more links.

We need at least three components in a working Sparkplug setup:

- An MQTT server (or broker)

- A Sparkplug Host Application (server) which can monitor and control edge nodes and devices

- Sparkplug client side implementations which can act as Sparkplug edge nodes and devices

A Sparkplug Device is the network end point that data is obtained from and commands are sent to. In an industrial IoT environment, such a device may be attached to a PLC by one of a variety of industrial protocols, but probably not MQTT. The PLC can act as a Sparkplug Edge Node, which is a concentrator passing messages to and from the devices attached to it. The Edge Node is also a device in its own right, in that data can be sent from it and commands sent to it. The Edge Node connects to the MQTT server using MQTT, naturally.

For the purposes of getting started quickly, I’m going to assume that all the components are installed and running on the same computer.

MQTT Server (Broker)

If you’re familiar with MQTT, you probably have an MQTT server already. If not, look at Eclipse Mosquitto or HiveMQ Community Edition (CE). Mosquitto is written in C and intended to be small, while HiveMQ CE is a Java implementation with a comprehensive administration and monitoring API. Both are open source. Follow the Quick Start instructions in the README in the HiveMQ CE GitHub repository to get up and running with it. The HiveMQ broker is also used in the Sparkplug TCK, which is still under development, so I’m going to use it as the example here.

After following the quick start instructions, you should have a HiveMQ broker running, with console output confirming that it has started.

Sparkplug Host Application

At the moment there is much less choice of Sparkplug Host Application than of MQTT broker. Once the standardization of Sparkplug is complete, I expect many more implementations to surface. For now, I’m going to focus on Inductive Automation’s Ignition platform, which you can download from the Inductive Automation website. My experience is with installing and running it on Linux, so you may have to amend the procedure slightly on Windows.

Run the installer you’ve downloaded, choose the install location and use the default set of modules. I prefer not to run Ignition as a service or to start it immediately. You can then run the Ignition server by switching to the install location and running the Linux command:

sh ignition.sh console

(I assume on Windows it will be just "ignition console".) Now you can point a web browser at localhost:8088. It asks you which edition to install; I chose the Maker Edition for personal projects. You’ll need to create an Inductive Automation account to get a license. Once you’ve done that, you can create a user account to access the Ignition server. I leave the ports at their default configuration. After this you should be able to start the “gateway”.

At this point you should get the option to “Enable Quick Start”, which seems a good idea for a new user, so I did that. You’ll need the login credentials you just created.

The next job is to install the MQTT Transmission module for Ignition. There is a video about installing and configuring Transmission. The Transmission module allows Sparkplug messages containing data updates for devices and end points (in this case both simulated) to be sent to the MQTT broker.

Once that is done, the Transmission module should be connected to the HiveMQ broker (or whichever MQTT broker you are using), because there is a default connection to tcp://localhost:1883. Data messages from the example configuration should then be sent to that broker.
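Before pointing Ignition or Tahu at the broker, it can help to confirm that something is listening on the default address. The following Java sketch opens a plain TCP connection to the host and port in a URI such as tcp://localhost:1883. The class and method names are my own and are not part of Ignition or Tahu. It only proves that a socket is accepting connections, not that the listener speaks MQTT.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;

public class BrokerCheck {

    /** Returns true if a TCP connection to the host and port in brokerUri succeeds within timeoutMs. */
    public static boolean isReachable(String brokerUri, int timeoutMs) {
        URI uri = URI.create(brokerUri);                          // e.g. "tcp://localhost:1883"
        if (uri.getHost() == null) {
            return false;                                         // not a host:port style URI
        }
        int port = uri.getPort() == -1 ? 1883 : uri.getPort();    // 1883 is the standard unencrypted MQTT port
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(uri.getHost(), port), timeoutMs);
            return true;                                          // something accepted the TCP connection
        } catch (IOException e) {
            return false;                                         // refused, timed out or unknown host
        }
    }

    public static void main(String[] args) {
        String broker = args.length > 0 ? args[0] : "tcp://localhost:1883";
        boolean ok = isReachable(broker, 2000);
        System.out.println(broker + (ok ? " is accepting connections" : " is not reachable"));
    }
}
```

Run it with no argument to check tcp://localhost:1883, or pass a different broker URI as the first argument.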

The Quick Start configuration will have created an example Sparkplug Edge Node, but what we don’t have yet is a Sparkplug Host Application. For that we need the Ignition MQTT Engine module. There is another video about configuring MQTT Engine. The most important thing is to make sure that the primary host id is set to the same value in both the Transmission and Engine configurations.

The Quick Start configuration includes a device simulator that publishes simulated data from an OPC UA device. At this point, these data update messages are not reaching the MQTT broker; we need further configuration for that. The data is being sent to a sample Ignition application, though. To see it, from Home in the Ignition web console, select “View Projects” then “Launch Project” under “Sample Quick Start Project”. You will be taken to the Quick Start home page. Switch to the “Ignition 101” page to see some animated graphs of the simulated data, which you can explore.

Ignition Designer

To get that data to the MQTT broker, we need another Ignition component: the Designer. On the Ignition web console home page there is a button to download it (in the Build It section).

Download the correct package for your OS, and then install or extract it. On Linux this is just a matter of extracting the tar file contents to a suitable location.

Now launch the Designer. On Linux I find it easiest to switch to the app directory and run the designerlauncher.sh program. This opens the Designer Launcher window, and your installed server should be showing as a box within it. Select that box, and then press the “Open Designer” button.

Use the credentials you created to log in. Then press OPEN on the “Sample Quick Start Project” to open it in the Designer. I learned the next steps from the documentation page Sending OPC Tag Data with Transmission.

- Open the OPC Browser (View->Panels->OPC Browser)

- Expand Devices to see [Sample_Device]

- In the Tag Browser (the bottom left-hand panel in the Designer), choose “default” in the top list box.

- Drag the [Sample_Device] from the OPC Browser to the left-hand column of the Tag Browser (making sure “default” is still selected).

You should now see the _Sample_Device_ tag folder in the Tag Browser window. If you expand it, you should see sub-folders such as _Controls_ and _Ramp_.

You can delete some of the sub-folders if you like, such as _Controls_. Leave the Ramp folder at least though.

Now, to get the data values into the MQTT broker, we need to go to the Transmission module configuration in the web console.

- Go to MQTT TRANSMISSION Settings -> Transmitters -> Create new Settings

- Create a name for the Transmitter

- The “Tag Provider” is “default”

- Everything else can be left unchanged. Press “Create New Settings”

- Go back to the “General” pane, and “Save Changes”.

The Sparkplug data messages should now be sent to the MQTT broker. To see the raw data, you can use any MQTT subscriber program or app. To see the decoded details of the messages, we are going to use another Eclipse project, Tahu.
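Because Sparkplug puts everything under the spBv1.0 namespace, a generic subscriber can see all Sparkplug traffic by subscribing to the wildcard filter spBv1.0/#. You can narrow that with the single-level wildcard, for example spBv1.0/+/NDATA/+ for edge node data only. The Java sketch below shows how MQTT matches a topic against such a filter. It is a hypothetical helper, not a Tahu or broker API. Bear in mind that Sparkplug B payloads are encoded with Protocol Buffers, so a generic subscriber will show them as binary.

```java
public class TopicFilter {

    /** Returns true if an MQTT topic name matches a subscription filter using + and # wildcards. */
    public static boolean matches(String filter, String topic) {
        // Wildcards at the first level must not match topics beginning with '$' (e.g. $SYS/...)
        if (topic.startsWith("$") && (filter.startsWith("+") || filter.startsWith("#"))) {
            return false;
        }
        String[] f = filter.split("/", -1);   // -1 keeps empty levels, which are significant in MQTT
        String[] t = topic.split("/", -1);
        for (int i = 0; i < f.length; i++) {
            if (f[i].equals("#")) {
                // '#' matches this level and everything below it, including the parent level itself;
                // it is only valid as the last level of a filter
                return i == f.length - 1;
            }
            if (i >= t.length) {
                return false;                 // filter has more levels than the topic
            }
            if (!f[i].equals("+") && !f[i].equals(t[i])) {
                return false;                 // literal level differs
            }
        }
        return f.length == t.length;          // without '#', the level counts must agree exactly
    }

    public static void main(String[] args) {
        String ndata = "spBv1.0/Sample Device/NDATA/Ramp";
        System.out.println(matches("spBv1.0/#", ndata));           // true
        System.out.println(matches("spBv1.0/+/NDATA/+", ndata));   // true
        System.out.println(matches("spBv1.0/+/DDATA/#", ndata));   // false
    }
}
```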

Eclipse Tahu

Get the Tahu project by cloning the git repository:

git clone https://github.com/eclipse/tahu.git

Change into the new tahu directory, switch to the develop branch and build:

cd tahu

git checkout develop

mvn clean install

Now switch to the directory where the Sparkplug listener has been built:

cd tools/java_sparkplug_b_listener/target

and run the listener:

java -jar sparkplug_b_listener-0.5.13-SNAPSHOT.jar

Check the version number of the built jar, because it may be different for you. It certainly will be if you’re reading this some time after publication!

Now you should see the content of the Sparkplug messages being received. Here is an example:

Message Arrived on Sparkplug topic spBv1.0/Sample Device/NDATA/Ramp

{

"timestamp" : 1642849106212,

"metrics" : [ {

"name" : "Ramp6",

"timestamp" : 1642849104666,

"dataType" : "Double",

"value" : 349.87744

},

... similar entries deleted here for conciseness

{

"name" : "Ramp4",

"timestamp" : 1642849105667,

"dataType" : "Double",

"value" : 364.78933333333333

} ],

"seq" : 123

}

You’ll see that the message is being received on topic:

spBv1.0/Sample Device/NDATA/Ramp

The levels of this topic mean:

- spBv1.0 – a prefix on Sparkplug messages to indicate the version

- Sample Device – a group identifier

- NDATA – message type, in this case data from an edge node (as opposed to a device attached to an edge node which would have DDATA as its message type)

- Ramp – the edge node identifier, which has to be unique within the Sparkplug group
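To make the topic structure concrete, here is a minimal Java sketch that splits a Sparkplug topic into these levels and rebuilds it. It needs Java 16 or later for records. The SparkplugTopic type is hypothetical and not part of Tahu. It assumes that device-level messages such as DDATA add the device identifier as a fifth level, and it deliberately rejects other shapes, such as host application STATE topics.

```java
import java.util.Optional;
import java.util.Set;

/** A parsed Sparkplug B topic: namespace/group_id/message_type/edge_node_id[/device_id]. */
public record SparkplugTopic(String namespace, String groupId, String messageType,
                             String edgeNodeId, Optional<String> deviceId) {

    // Message types sent on behalf of a device attached to an edge node
    private static final Set<String> DEVICE_TYPES = Set.of("DBIRTH", "DDEATH", "DDATA", "DCMD");

    /** Splits a topic into its levels; device-level topics carry a fifth level, the device id. */
    public static SparkplugTopic parse(String topic) {
        String[] levels = topic.split("/", -1);   // -1 keeps empty levels so malformed topics are caught
        if (levels.length != 4 && levels.length != 5) {
            throw new IllegalArgumentException("Not an edge node or device topic: " + topic);
        }
        Optional<String> device = levels.length == 5 ? Optional.of(levels[4]) : Optional.empty();
        return new SparkplugTopic(levels[0], levels[1], levels[2], levels[3], device);
    }

    /** Rebuilds the topic string from its parts. */
    public String toTopic() {
        String base = String.join("/", namespace, groupId, messageType, edgeNodeId);
        return deviceId.map(d -> base + "/" + d).orElse(base);
    }

    /** True for DBIRTH, DDEATH, DDATA and DCMD; false for edge node messages such as NDATA. */
    public boolean isDeviceMessage() {
        return DEVICE_TYPES.contains(messageType);
    }

    public static void main(String[] args) {
        SparkplugTopic t = parse("spBv1.0/Sample Device/NDATA/Ramp");
        System.out.println(t.groupId() + " | " + t.messageType() + " | " + t.edgeNodeId()); // Sample Device | NDATA | Ramp
        System.out.println(t.deviceId().orElse("(no device)"));                            // (no device)
    }
}
```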

Each NDATA message contains a timestamp, sequence number and an array of metric data values being reported.
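The sequence number lets a host application notice missed messages. As I understand the Sparkplug B specification, seq runs from 0 to 255 and then wraps back to 0, increasing by one with each message from an edge node. Timestamps are UTC milliseconds since the Unix epoch. The SequenceTracker class below is a hypothetical sketch of that check for a single edge node. A real host application would also need to reset its expectations when a new NBIRTH arrives.

```java
import java.time.Instant;

/** Tracks the Sparkplug B sequence number of one edge node to spot missing or out-of-order messages. */
public class SequenceTracker {
    private Integer lastSeq = null;   // null until the first message has been seen

    /** Returns true if seq is the expected successor of the previous value (0..255, wrapping 255 -> 0). */
    public boolean accept(int seq) {
        if (seq < 0 || seq > 255) {
            throw new IllegalArgumentException("Sparkplug B seq must be 0..255, got " + seq);
        }
        boolean inOrder = lastSeq == null || seq == (lastSeq + 1) % 256;
        lastSeq = seq;                // resynchronise on whatever arrived
        return inOrder;
    }

    /** Converts a Sparkplug timestamp (milliseconds since the Unix epoch, UTC) to an Instant. */
    public static Instant toInstant(long timestampMillis) {
        return Instant.ofEpochMilli(timestampMillis);
    }

    public static void main(String[] args) {
        SequenceTracker tracker = new SequenceTracker();
        System.out.println(tracker.accept(123));        // true: first message seen
        System.out.println(tracker.accept(124));        // true: next in sequence
        System.out.println(tracker.accept(126));        // false: 125 was missed
        System.out.println(toInstant(1642849106212L));  // 2022-01-22T10:58:26.212Z
    }
}
```

Applied to the listener output above, the payload timestamp 1642849106212 corresponds to 2022-01-22T10:58:26.212Z.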

Publishing Data from a Device

For this last step in a quick run through of setting up a Sparkplug environment, we are going to use a Tahu utility to publish some data from a device.

We need to edit a couple of parameters in a source file first and then rebuild the project. In the file

sparkplug_b/stand_alone_examples/java/src/main/java/org/eclipse/tahu/SparkplugExample.java

Change the line:

private String clientId = null;

so that the clientId has a value. Any value, such as “anything”, will do unless you need to distinguish this client from many other MQTT clients:

private String clientId = "anything";
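The client ID matters because, under MQTT 3.1.1, a broker that receives a connection with an ID already in use disconnects the existing client. If you run several copies of the example, a hypothetical helper like the one below could give each copy a distinct ID. It keeps to the characters and the 23-byte length that every MQTT 3.1.1 server is required to accept. Many brokers allow longer IDs, but this is the portable subset. Nothing here is part of Tahu.

```java
import java.security.SecureRandom;

public class ClientIds {
    // Characters every MQTT 3.1.1 server must accept in a client identifier
    private static final String ALLOWED =
            "0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ";
    private static final int MAX_PORTABLE_LENGTH = 23;   // servers must accept IDs of 1..23 bytes
    private static final int SUFFIX_LENGTH = 8;
    private static final SecureRandom RANDOM = new SecureRandom();

    /** Returns the prefix (filtered to portable characters) followed by a random suffix, at most 23 characters. */
    public static String unique(String prefix) {
        StringBuilder id = new StringBuilder();
        for (char c : prefix.toCharArray()) {
            if (ALLOWED.indexOf(c) >= 0) {
                id.append(c);                             // drop characters outside the portable set
            }
        }
        if (id.length() > MAX_PORTABLE_LENGTH - SUFFIX_LENGTH) {
            id.setLength(MAX_PORTABLE_LENGTH - SUFFIX_LENGTH);   // leave room for the suffix
        }
        for (int i = 0; i < SUFFIX_LENGTH; i++) {
            id.append(ALLOWED.charAt(RANDOM.nextInt(ALLOWED.length())));
        }
        return id.toString();
    }

    public static void main(String[] args) {
        // Prints "anything" followed by 8 random characters; the suffix differs on each run
        System.out.println(unique("anything"));
    }
}
```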

Then change:

private long PUBLISH_PERIOD = 60000; // Publish period in milliseconds

to

private long PUBLISH_PERIOD = 2000; // Publish period in milliseconds

so we see the results sooner. Rebuild the project with “mvn clean install”, then switch to the sparkplug_b/stand_alone_examples/java/target directory and run the example:
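PUBLISH_PERIOD simply sets how often the example publishes. The Java sketch below shows one common way to drive a fixed publishing period with ScheduledExecutorService. It is not necessarily how SparkplugExample.java is written, and the println is a hypothetical stand-in for building and publishing a real DDATA payload.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class PeriodicPublisher {

    /** Runs publishOnce immediately and then every periodMillis until the returned future is cancelled. */
    public static ScheduledFuture<?> start(ScheduledExecutorService scheduler,
                                           long periodMillis, Runnable publishOnce) {
        // scheduleAtFixedRate keeps a steady cadence; a slow publish delays the next run but never overlaps it
        return scheduler.scheduleAtFixedRate(publishOnce, 0, periodMillis, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) throws InterruptedException {
        long publishPeriod = 2000;                 // same value as the edited PUBLISH_PERIOD
        AtomicInteger count = new AtomicInteger();
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        // Hypothetical stand-in for building and publishing a DDATA payload
        start(scheduler, publishPeriod, () -> System.out.println("publish #" + count.incrementAndGet()));
        Thread.sleep(7000);                        // roughly four publishes, at 0, 2, 4 and 6 seconds
        scheduler.shutdown();
    }
}
```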

java -jar sparkplug_b_example-0.5.13-SNAPSHOT.jar

Now we should be publishing some data every two seconds on the topic:

spBv1.0/Sparkplug B Devices/DDATA/Java Sparkplug B Example/SparkplugBExample

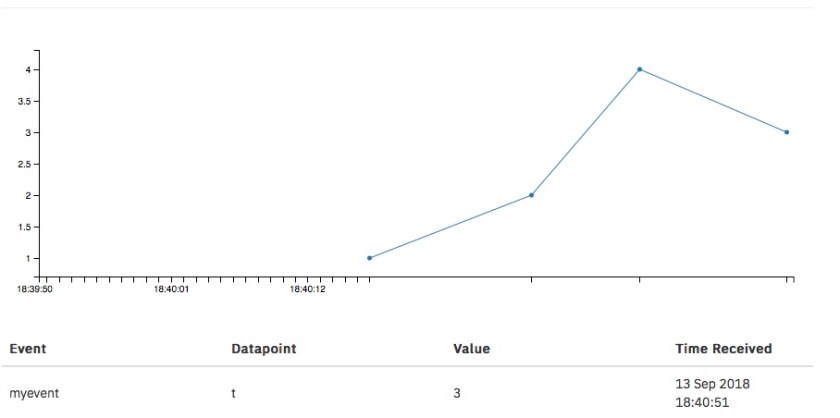

The last two levels of this topic are the edge node id and device id respectively. Switching back to the Ignition Designer, we can see the results of those messages arriving. In the Tag Browser, switch to the MQTT Engine tag provider, then expand “Edge Nodes”, “Sparkplug B Devices”, “Java Sparkplug B Example” and “SparkplugBExample”. You should see the values from the incoming messages being updated.

You are now in a position to take the data from these messages and use it in a dashboard, like the “Explore Ignition” page in the quick start project, built with the Designer.

Conclusion

This is just a very quick guide to getting a Sparkplug setup going. The Ignition platform has a lot of capability to interface with a wide variety of edge nodes, devices, databases and other systems, as well as flexible UIs. As the Sparkplug specification is formalized and becomes a standard, we expect other platforms with different focuses to become available too.

There are many other guides and resources to help you continue understanding and using Sparkplug. Here are some: